- 機械工業雜誌

Real-time Camera Image Processing for Autonomous Vehicles 自駕車即時影像處理

Author: Denis Xynkin

Published: 2021/04/01

Abstract

Autonomous vehicles process immense amounts of data, and they have to do it in real time. There are size, energy and thermal limits on the amount of computation that can reasonably be placed in a typical sedan or minivan. Out of this contradiction, various design compromises emerge. This article reveals some specific challenges and idiosyncrasies; looks into some important design choices and how they define the overall system architecture; argues for the importance of the camera interface; discusses the benefits and perils of using video compression; attempts to define some useful video-related software abstractions; points out the qualitative change that hardware timestamps bring; and describes the advantages of HDR cameras along with the associated computational burden.

Introduction

At a time when taking a good photo with your phone is as simple as finding something worth shooting, a newcomer may be puzzled by the challenges associated with obtaining video data for an autonomous vehicle. This article gives an overview of the camera-related problems our team has faced and offers some suggestions on how they may be addressed.

Our autonomous vehicles (targeted at levels 4 and 5) need between 6 and 12 cameras, depending on the vehicle and the use case. The cameras we use deliver 1920x1208 at 30 FPS or 2048x1536 at 33 FPS, which adds up to around 2 GB of raw video data per second that various perception modules must analyze in real time [1]. Given that this amount of data is generated by cameras alone (our cars use many other types of sensors) and that the computing power a vehicle can carry is rather modest, some compromises and careful load distribution are needed. Even before a frame reaches any of the perception modules, it undergoes a long series of conversions, which are described in the Software section.
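The aggregate figure quoted above can be sanity-checked with a short calculation. The sketch below is only an illustration: the function name is hypothetical, and the 2 bytes per pixel is an assumption (for example, raw samples padded to 16 bits), since the article does not state the pixel format.

```python
def raw_video_bytes_per_second(width, height, fps, cameras, bytes_per_pixel=2):
    """Uncompressed video data rate for a set of identical cameras.

    bytes_per_pixel=2 is an assumption (raw samples stored in 16 bits);
    the real value depends on the sensor output format.
    """
    return width * height * fps * cameras * bytes_per_pixel


if __name__ == "__main__":
    # Camera modes and camera counts are taken from the text above.
    for width, height, fps in [(1920, 1208, 30), (2048, 1536, 33)]:
        for cameras in (6, 12):
            rate = raw_video_bytes_per_second(width, height, fps, cameras)
            print(f"{cameras:2d} x {width}x{height}@{fps}: {rate / 1e9:.2f} GB/s")
```

Under this assumption the rate ranges from slightly under 1 GB/s for six of the smaller cameras to about 2.5 GB/s for twelve of the larger ones, which is consistent with the order of magnitude given in the text.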

When developing software for a distributed system such as an autonomous vehicle, the system as a whole needs to be considered: its advantages and limitations should be reflected in the design of each individual component. We hope to justify this statement in the Acquisition System section, which describes the interactions between the parts of our video acquisition and processing systems.

But first of all, the camera itself: a lens - the frontier of our visual system; a sensor - the birthplace of the data; firmware - a surprisingly capable preprocessing engine; and an interface - the highway to the place where lines of colorful pixels are recognized as unnecessarily fast cars, pesky scooters and painfully slow pedestrians. The next section gives an idea of which camera attributes to consider when choosing one.

Camera

One of the most obvious attributes of a digital camera is its resolution. To estimate the resolution required for a particular use case, we start from a camera placement plan (made by the perception team). The plan tells us the direction and FoV [2] of each camera. We also need to know the minimal size (width and height in pixels) at which the perception software can recognize an object, for example a car, and we need to decide how far away we want to be able to recognize that object. With these numbers, the field of view can be treated as an isosceles triangle whose apex is the camera and whose base is the width of the scene at the chosen distance. Dividing the base by the horizontal resolution gives meters per pixel at that distance, and from that we get the number of pixels across the frame needed to represent a car with enough of them.
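The isosceles-triangle estimate can be sketched as follows. This is a minimal illustration that assumes an ideal pinhole camera with no lens distortion; the function names and all example numbers (60 degree FoV, 100 m, a 1.8 m wide car, 20 pixels) are hypothetical and not taken from the article.

```python
import math


def scene_width_m(hfov_deg, distance_m):
    """Base of the isosceles triangle: scene width visible at a distance."""
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)


def meters_per_pixel(hfov_deg, distance_m, h_res_px):
    """Ground coverage of one pixel column at the given distance."""
    return scene_width_m(hfov_deg, distance_m) / h_res_px


def required_h_res_px(hfov_deg, distance_m, object_width_m, min_object_px):
    """Horizontal resolution needed so the object spans min_object_px pixels."""
    pixels = scene_width_m(hfov_deg, distance_m) * min_object_px / object_width_m
    return math.ceil(pixels)


if __name__ == "__main__":
    # Hypothetical requirement: a 1.8 m wide car must cover at least
    # 20 pixels at 100 m when seen through a 60 degree horizontal FoV.
    needed = required_h_res_px(60.0, 100.0, 1.8, 20)
    print(f"required horizontal resolution: {needed} px")
    print(f"at 1920 px: {meters_per_pixel(60.0, 100.0, 1920):.3f} m/pixel")
```

The same calculation applies vertically with the vertical FoV and the object height. A real lens, especially a wide-angle one, spreads pixels unevenly across the field of view, so the result is best treated as a first estimate.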

The next attribute to determine is the frame rate [3]. This is a temporal characteristic of a camera, as opposed to resolution, which is a spatial one. To choose it, you need to decide on your maximum autonomous driving speed. If, say, it is 60 km/h (about 16.7 m/s) and the camera runs at 10 FPS, then you will get a visual update roughly every 1.7 meters.
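The relationship between speed, frame rate and distance travelled per frame is simple enough to tabulate. The helper below is a hypothetical sketch; the candidate frame rates other than 10 FPS are only illustrative.

```python
def meters_between_frames(speed_kmh, fps):
    """Distance the vehicle travels between two consecutive frames."""
    speed_m_per_s = speed_kmh / 3.6  # km/h to m/s
    return speed_m_per_s / fps


if __name__ == "__main__":
    # 60 km/h is the example speed used in the text.
    for fps in (10, 30, 33):
        gap = meters_between_frames(60.0, fps)
        print(f"60 km/h at {fps} FPS: one frame every {gap:.2f} m")
```

Working backwards from an acceptable distance between updates gives the minimum frame rate for a given top speed.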

With this out of the way, we can decide whether a global shutter [4] is required or a rolling shutter [4] will suffice. Although our team uses both types of cameras, this article focuses on global-shutter cameras, since they expose all pixels at the same time, which avoids skew on moving objects, and are readily available from various manufacturers.

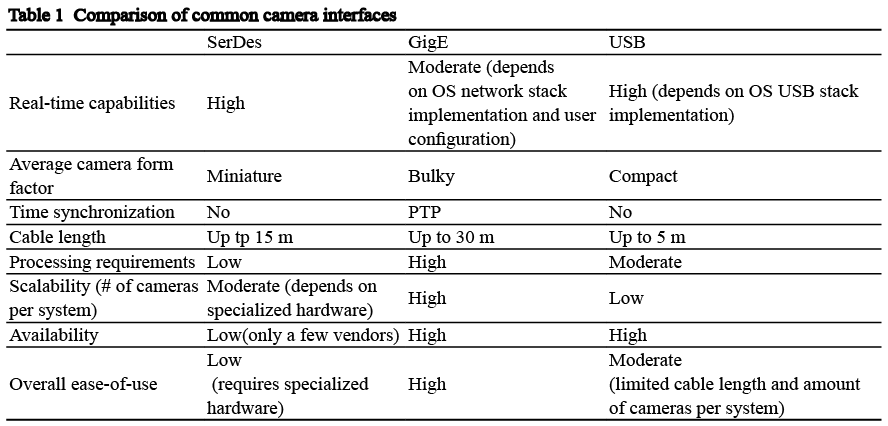

With these three pieces of the puzzle in place, we can search for suitable sensors from semiconductor manufacturers and, once a sensor is found, for available cameras based on it. At this point we need to choose a camera interface. It could be argued that this is a crucial decision, because the interface defines a large part of the whole acquisition system, its real-time capabilities, and its future flexibility and extensibility [5]. The choice of interface may either decouple your technology from the hardware it runs on or, on the contrary, tie you to a particular group of vendors and their willingness to invest in the related platforms and SDKs [6]. Your ability to manage latency and to synchronize the camera's on-board time is defined by the interface as well. At the time of writing there are several common camera interfaces: GMSL [7, 8], GigE Vision [9], USB 3 [10] and FPD-Link III [11]. For the sake of discussion, this article treats GMSL and FPD-Link III as similar enough to group them under the name SerDes.

As can be seen from Table 1, it is convenient to start development with GigE cameras and later, as your technology gets closer to the production stage, invest in a SerDes-enabled platform. An important (and not immediately obvious) distinction between SerDes and the other interfaces is that SerDes is a serialize/deserialize technology, which is to some extent interface-agnostic. It can carry Ethernet, I2C, HDMI, MIPI CSI-2, LVDS, parallel LVCMOS, etc. [8, 11]. This means that if a GMSL MIPI CSI-2 camera is chosen, the acquisition system needs CSI-related hardware and drivers in addition to GMSL-related hardware and drivers. Commonly, the CSI interface is part of the SoC and its drivers come with the SDK, while the SerDes link is developed by a third party. In that case there is integration work and there are version dependencies to manage, which can be a rather serious burden for a small company.

For the complete article, please order the April 2021 issue (single-article price: see the 材化所 price list).
